In this final article in the series, we will break down the Terraform code used to create the

The NSOT module uses a service we have not discussed yet: the Auto Scaling group (ASG). An Auto Scaling group contains a collection of EC2 instances that share similar characteristics and are treated as a logical grouping for the purposes of instance scaling and management. For example, if a single application runs across multiple instances, you might increase the number of instances in that group to improve the application's performance, or decrease it to reduce costs when demand is low.

You can use the Auto Scaling group to scale the number of instances automatically based on criteria that you specify, or to maintain a fixed number of instances even if an instance becomes unhealthy. Automatically scaling and maintaining the number of instances in an Auto Scaling group is the core functionality of the Amazon EC2 Auto Scaling service. In our case, we will be passing parameters using the code below to the

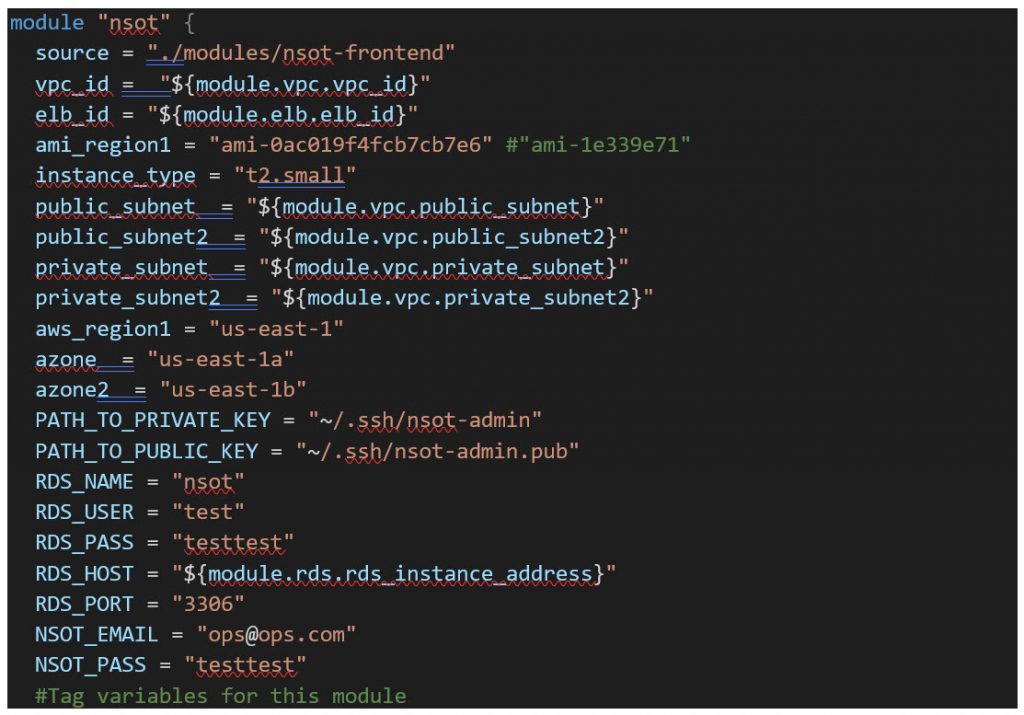

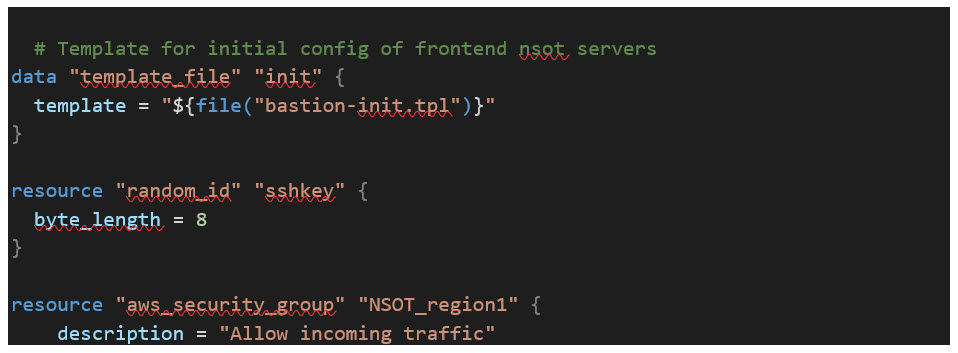
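As a rough illustration of the scaling parameters described above, here is a minimal sketch of an Auto Scaling group in Terraform. It assumes Terraform 0.12 or later syntax; the resource name, variable names, sizes, and tag values are placeholders chosen for this example, not the values used in the NSOT module.

```hcl
# Hypothetical inputs: these names are placeholders, not the module's variables.
variable "subnet_ids" {
  type        = list(string)
  description = "Subnets (ideally in different AZs) to spread instances across"
}

variable "launch_configuration_name" {
  type        = string
  description = "Name of an existing launch configuration"
}

resource "aws_autoscaling_group" "example" {
  name_prefix          = "example-asg-"
  launch_configuration = var.launch_configuration_name
  vpc_zone_identifier  = var.subnet_ids

  # The group never shrinks below min_size or grows beyond max_size;
  # desired_capacity is the number of instances it starts with.
  min_size         = 2
  max_size         = 4
  desired_capacity = 2

  # Replace instances that fail EC2 status checks.
  health_check_type         = "EC2"
  health_check_grace_period = 300

  tag {
    key                 = "Name"
    value               = "example-asg-instance"
    propagate_at_launch = true
  }
}
```

Setting min_size, max_size, and desired_capacity to the same value gives the "fixed number of instances" behavior; leaving room between min_size and max_size lets scaling policies adjust the count.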

First, we will be using the Terraform template_file data source to pass variables to the config init script, which is then rendered in the launch configuration resource to add the base config to the

/modules/nsot-frontend/main.tf

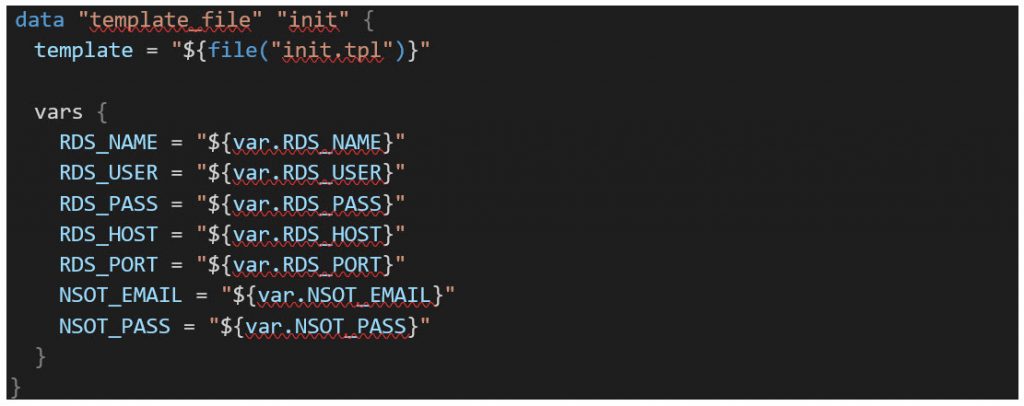

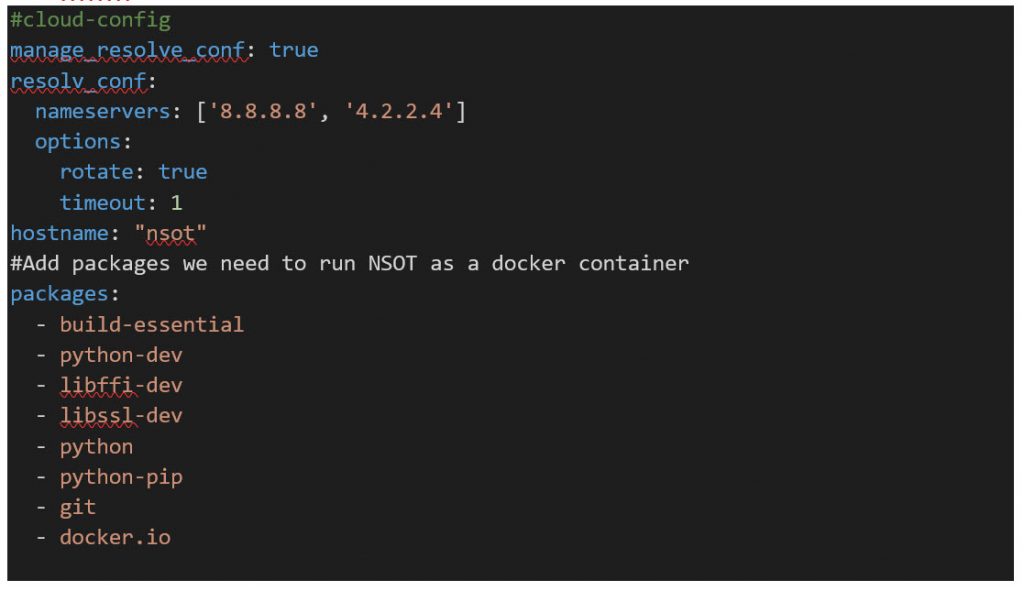

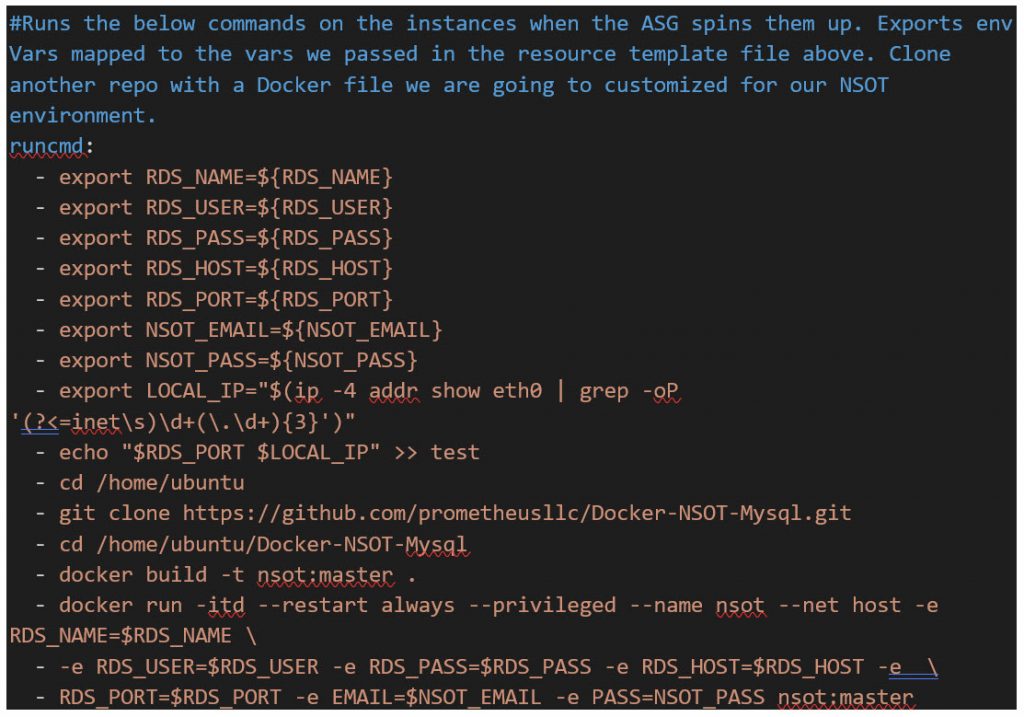

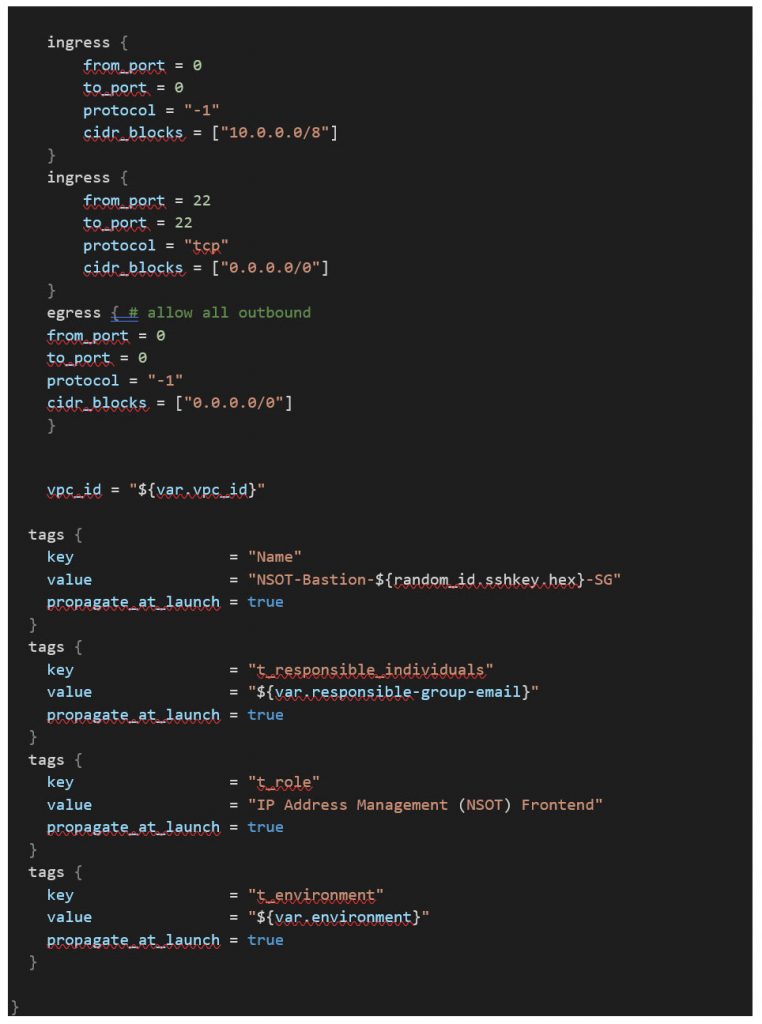

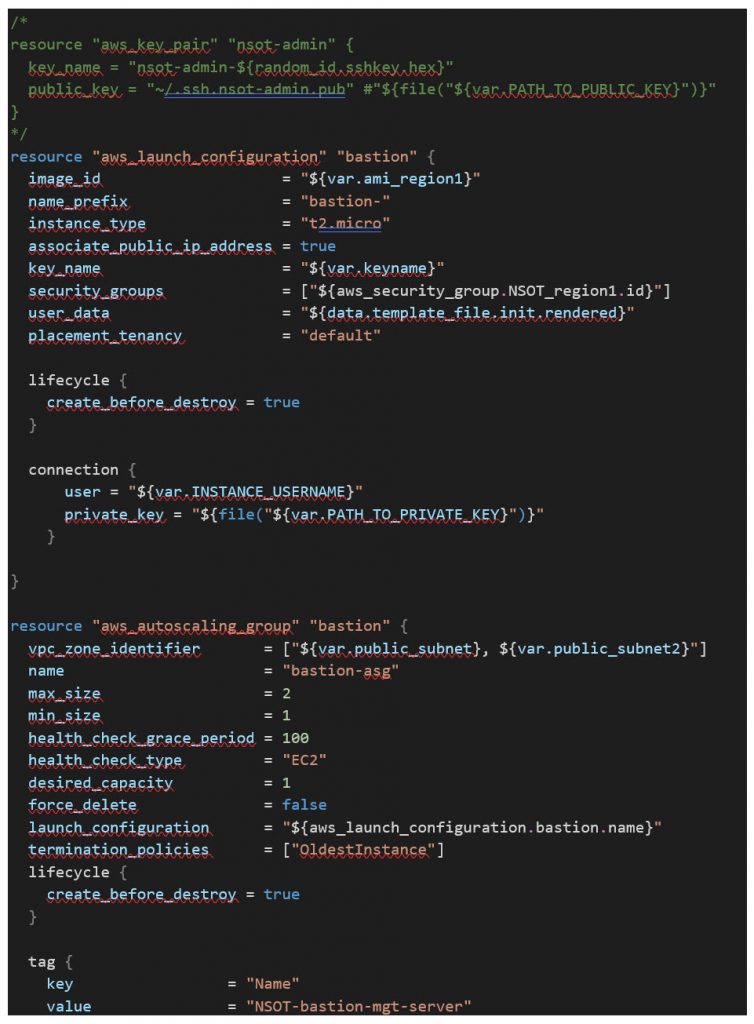

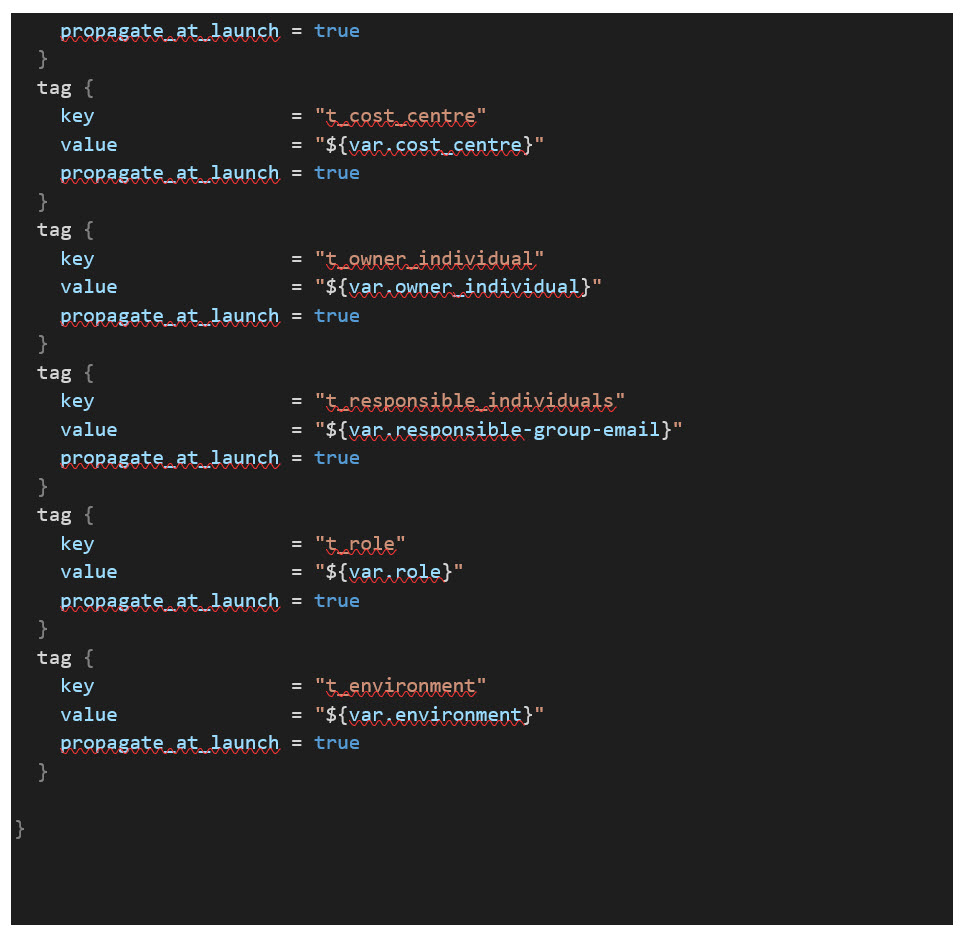
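The pattern of rendering an init script with template_file and handing it to a launch configuration can be sketched as follows. This is a minimal example under stated assumptions: the template path init.tpl, the db_endpoint variable, the instance type, and the resource names are all hypothetical and are not taken from the module itself.

```hcl
# Hypothetical inputs: placeholders for this sketch only.
variable "ami_id" {
  type = string
}

variable "db_endpoint" {
  type = string
}

# Render the init script, substituting ${db_endpoint} inside the template file.
data "template_file" "init" {
  template = file("${path.module}/init.tpl")

  vars = {
    db_endpoint = var.db_endpoint
  }
}

resource "aws_launch_configuration" "example" {
  name_prefix   = "example-lc-"
  image_id      = var.ami_id
  instance_type = "t2.micro"

  # The rendered script runs on first boot of each instance.
  user_data = data.template_file.init.rendered

  # Launch configurations are immutable, so create the replacement
  # before destroying the old one when something changes.
  lifecycle {
    create_before_destroy = true
  }
}
```

Because every instance launched by the Auto Scaling group boots from the same rendered user data, replacement instances come up with the same base configuration without manual steps.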

Next, let’s break down what’s going on in the launch configuration resource and autoscaling group resource code:

File: Init.tpl

That’s it! Now you have a redundant

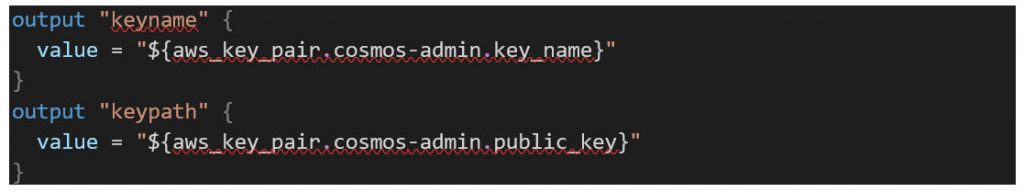

/modules/nsot-frontend/outputs.tf

Now that we have a working pair of

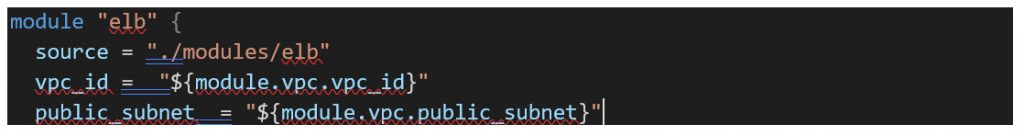

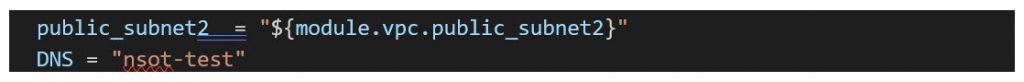

/main.tf

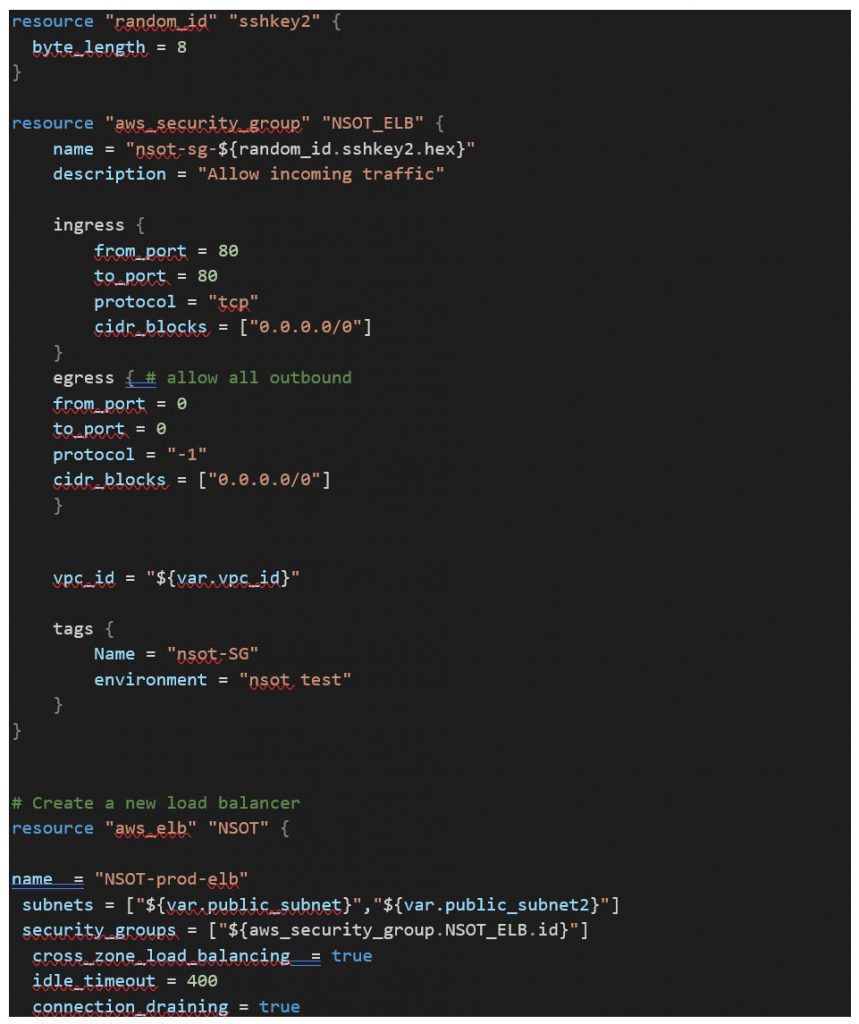

/modules/elb/main.tf

Great! Now we have everything we need to deploy a fully functional IPAM solution. The final module creates a redundant bastion/management host, again using an Auto Scaling group, along with a config template that installs a few administration tools for managing your MySQL RDS cluster and the

File: /modules/bastion/main.tf
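One common way to keep a bastion host available is to pin an Auto Scaling group to a fixed size and let it relaunch the instance if it fails. The sketch below assumes a group size of one spread over several subnets; the variable and resource names are hypothetical, and the actual module may use different sizes and settings.

```hcl
# Hypothetical inputs: placeholders for this sketch only.
variable "public_subnet_ids" {
  type        = list(string)
  description = "Public subnets in different AZs where the bastion may launch"
}

variable "bastion_launch_configuration" {
  type        = string
  description = "Name of the launch configuration carrying the bastion init script"
}

resource "aws_autoscaling_group" "bastion" {
  name_prefix          = "bastion-asg-"
  launch_configuration = var.bastion_launch_configuration
  vpc_zone_identifier  = var.public_subnet_ids

  # Fixed size: the group does not scale, it only replaces a failed instance,
  # possibly in a different subnet/AZ.
  min_size         = 1
  max_size         = 1
  desired_capacity = 1

  tag {
    key                 = "Name"
    value               = "bastion"
    propagate_at_launch = true
  }
}
```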

This completes the series of articles walking through the Terraform code that builds a complete open-source IPAM solution in AWS. The video link below will guide you through cloning the Prometheus LLC repo and running the Terraform commands that deploy the entire project. Happy Hacking!